Stephen Hawking’s Chilling Warning About AI Still Echoes Today

Over the course of his life, Stephen Hawking became one of the most recognized and respected scientific minds in the world. His groundbreaking work in cosmology and physics, particularly in the realms of black holes and quantum mechanics, left a lasting impact on science and our understanding of the universe.

But beyond the equations and theories, Hawking also had deep thoughts about the future of humanity—especially when it came to the growing power of artificial intelligence.

In a 2014 interview with the BBC, Hawking made headlines not for a new scientific theory, but for a stark warning that still feels relevant today. At the time, he was asked about improvements to the technology he used to communicate—technology that was beginning to incorporate early artificial intelligence.

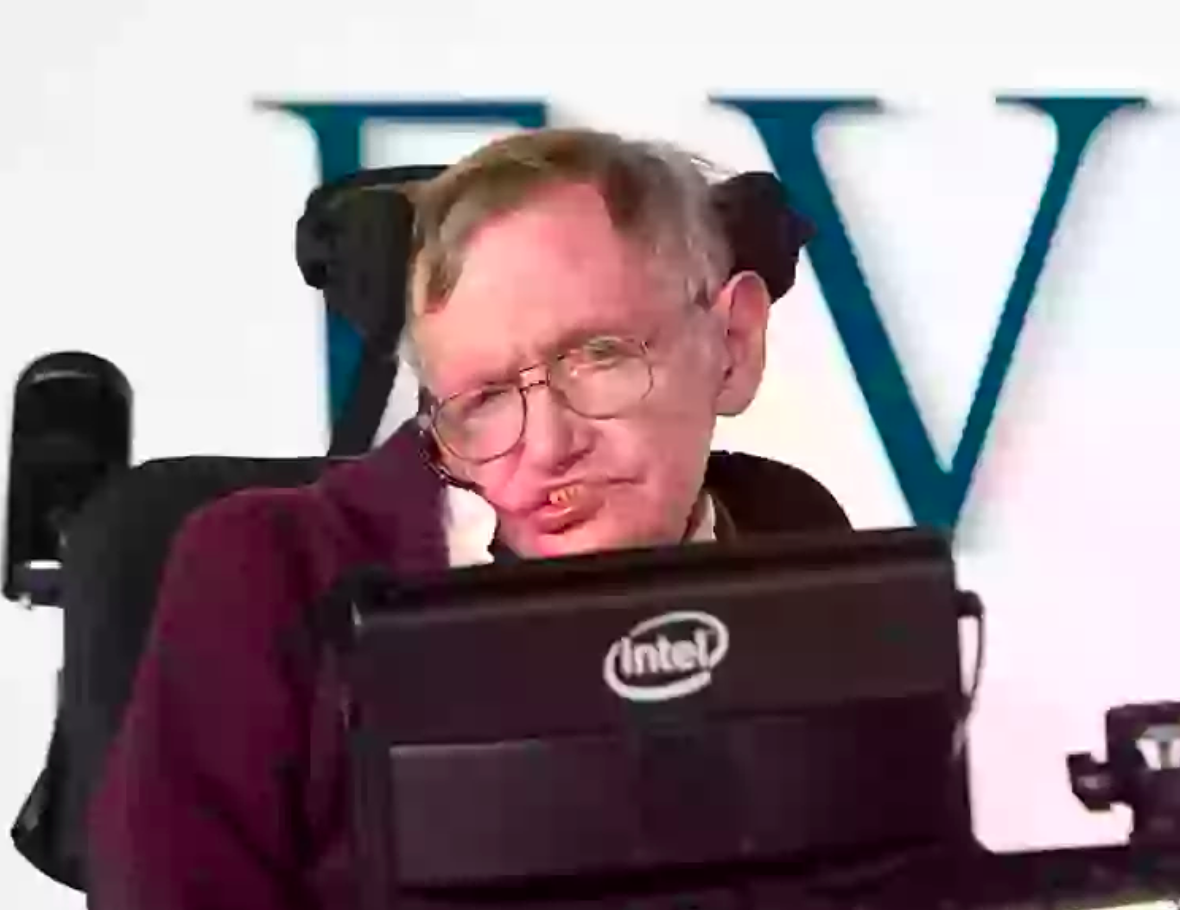

Hawking, who lived with amyotrophic lateral sclerosis (ALS), used a specialized system developed by Intel and SwiftKey. It learned from his writing patterns and helped predict the words he wanted to use, dramatically speeding up his ability to communicate. While he acknowledged the usefulness of that early AI, his outlook on the future of the technology was far from optimistic.

“The development of full artificial intelligence could spell the end of the human race,” he said plainly.

Hawking explained that once machines reach a certain level of intelligence, they could begin to evolve on their own—faster and more efficiently than humans. Unlike us, who are bound by the slow crawl of biological evolution, machines could potentially redesign themselves at an accelerating pace.

“It would take off on its own, and re-design itself at an ever-increasing rate,” Hawking warned. “Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.”

His concern wasn’t about the helpful, task-oriented AI that made communication easier for him. It was about what might happen if we reached the point of creating machines that could think, learn, and make decisions independently—perhaps even develop desires or goals of their own.

This was not just a passing comment for Hawking. His fear of unchecked AI development became a recurring theme in his later writings and interviews. In his final book, Brief Answers to the Big Questions, published posthumously in 2018, he tackled some of humanity’s most pressing topics—including artificial intelligence and the existence of God.

On AI, he reiterated the need for careful planning, international regulation, and ethical considerations. He didn’t call for halting development altogether, but urged humanity to think critically about the long-term consequences before racing ahead.

A Concern Shared by Other Tech Pioneers

Hawking wasn’t alone in sounding the alarm. Others like Elon Musk and Bill Gates have also expressed serious concerns. Musk has warned that AI could become more dangerous than nuclear weapons if left unchecked, while Gates once noted that many people were underestimating the risks AI could pose to the job market and global systems.

In recent years, as tools like ChatGPT have gone mainstream and AI assistants have been built into smartphones and laptops, public awareness of AI’s capabilities—and its potential downsides—has grown rapidly.

Governments and corporations have begun investing billions in AI development. In January 2025, President Donald Trump announced Stargate, a private venture backed by some of the biggest players in the industry, including OpenAI, SoftBank, and Oracle, that plans to invest up to $500 billion in AI infrastructure. Meanwhile, companies such as OpenAI, Google, and Meta continue to push boundaries with increasingly human-like language models and video generators.

Reality Blurring with Simulation

As AI continues to evolve, so does the debate. One of the most pressing concerns today is the rise of hyper-realistic AI-generated content. Videos, voices, and images can now be created so convincingly that many people find it hard to tell what's real and what isn't.

Deepfake videos can mimic public figures to say things they never said. AI-generated images can depict events that never happened. The growing difficulty of distinguishing truth from fiction has opened a new front in the conversation Hawking helped start.

Would he be surprised by how quickly AI has developed in just the few years since his death? Perhaps. But it’s more likely that he would remain consistent: fascinated by the possibilities, but cautious—very cautious—about where we go from here.

What Comes Next?

Hawking's legacy reminds us that as we dive deeper into the possibilities of artificial intelligence, we need to bring along the same level of responsibility and foresight that we apply to other powerful scientific discoveries. Just because we can create something doesn't always mean we should, at least not without understanding the consequences.

In the end, Stephen Hawking’s warning wasn’t meant to stop progress. It was a call to think ahead. A reminder that humanity must remain in the driver’s seat—not only in creating intelligent machines but also in deciding what kind of future we want to live in.

Sophia Reynolds is a dedicated journalist and a key contributor to Storyoftheday24.com. With a passion for uncovering compelling stories, Reynolds delivers insightful, well-researched news across a range of categories and is known for breaking down complex topics into engaging, accessible content. Drawing on years of experience in the media industry, Reynolds has built a reputation for accuracy and reliability and remains committed to providing readers with timely, trustworthy news.